When you hear “HIPAA,” you probably think of doctors’ offices and hospitals. However, for law firms dealing with personal injury, medical malpractice, or workers' compensation cases, the same rules apply because they often handle sensitive health information that must be kept confidential to protect client privacy.

Compliance comes down to putting specific, practical safeguards in place to keep your clients’ sensitive health information locked down, a duty that has become far more complex with the rise of modern AI technology.

There’s a dangerous misconception floating around that HIPAA is only a healthcare problem. It's not. The moment your personal injury firm receives a client's medical records for a case, you become what’s known as a 'Business Associate' under HIPAA law.

This legally binds your firm to the exact same data protection standards as the hospital that treated your client. The penalties for non-compliance aren’t just a slap on the wrist; we’re talking about staggering fines that can run into the millions, irreversible damage to your firm’s reputation, and even malpractice claims from clients whose private data was exposed. According to the U.S. Department of Health & Human Services, penalties for noncompliance can range from $100 to $50,000 per violation, with an annual maximum of $1.5 million.
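To make those figures concrete, here is a minimal arithmetic sketch in Python. It uses only the per-violation range and annual maximum quoted above, and it assumes every violation is of the same type and falls in the same calendar year. The function name `penalty_exposure` is hypothetical, and real penalties depend on factors this sketch ignores.

```python
# Figures quoted in the paragraph above (assumed to apply to one
# violation type within one calendar year).
MIN_PER_VIOLATION = 100
MAX_PER_VIOLATION = 50_000
ANNUAL_CAP = 1_500_000


def penalty_exposure(violations: int) -> tuple[int, int]:
    """Return the (low, high) dollar exposure for a number of violations."""
    if violations < 0:
        raise ValueError("violations must be zero or greater")
    # Each end of the range is capped at the annual maximum.
    low = min(violations * MIN_PER_VIOLATION, ANNUAL_CAP)
    high = min(violations * MAX_PER_VIOLATION, ANNUAL_CAP)
    return low, high
```

Under these assumptions, `penalty_exposure(40)` hits the annual maximum at the high end, because 40 violations at $50,000 each would otherwise total $2 million.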

The stakes have gotten even higher with the explosion of new AI tools. Many of the general-purpose AI models out there—like the ones powering public chatbots—were never built to handle confidential information. When an attorney or paralegal pastes a client’s medical history into one of these tools to get a quick summary, that sensitive data can be absorbed by the AI to train its future responses.

Practical Example: Imagine a paralegal uploads a client's detailed medical narrative into a generic AI chatbot to speed up their workflow. Without even realizing it, they could be committing a serious HIPAA violation if the AI provider doesn't have a Business Associate Agreement (BAA) in place. That client's diagnosis, treatment history, and personal identifiers could now become part of the AI's training data, at risk of being exposed in an unrelated user's query.

A BAA is a contract that HIPAA requires with any vendor that handles protected health information on your behalf.

"A law firm that fails to secure a BAA before using a cloud service or AI tool to process protected health information is gambling with both its finances and its reputation. It's a foundational step that cannot be skipped."

This technology gap highlights something every attorney needs to understand: not all AI is created equal. To maintain HIPAA compliance, attorneys should use law-specific AI rather than general models. These specialized platforms are designed with security and confidentiality baked in from the ground up.

Data breaches are a real and growing problem. In 2023 alone, the healthcare sector saw 725 major breaches that exposed over 133 million patient records. You can learn more about recent healthcare data breach statistics and see how these trends directly impact legal practices like yours.

To get a real handle on HIPAA compliance, you don’t need to get lost in dense legal jargon. The best way to approach it is by thinking of its core parts as concepts you already know from your legal practice. This mindset turns a complex set of regulations into clear, actionable duties for your firm.

At its core, HIPAA is built on three main pillars that every personal injury attorney must understand. Each rule covers a different part of data protection—from defining what information is confidential to dictating how you must act when things go wrong.

The HIPAA Privacy Rule is the foundation of patient confidentiality. Think of it as the "attorney-client privilege" but for health data.

This rule defines what Protected Health Information (PHI) is—basically any identifiable health information, from a diagnosis to a billing statement—and puts strict limits on who can see, use, or share it. For your firm, this means you can only use a client's PHI for purposes directly tied to their case, like building a demand letter or consulting a medical expert. Any other use requires explicit, written consent.

While the Privacy Rule sets the what and why of data protection, the HIPAA Security Rule dictates the how. It's your firm’s evidence handling protocol, but for digital files. This rule zeroes in on electronic Protected Health Information (ePHI) and requires three types of safeguards to protect it.

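These safeguards work together to create a multi-layered defense system for your firm's data:

- Administrative safeguards: the policies and procedures that make sure everyone on your team knows their role in protecting PHI.
- Physical safeguards: controls on tangible access to PHI in your office, workstations, and file rooms.
- Technical safeguards: the technology-based controls that protect ePHI.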

These three layers are not optional; they create the robust defense you need against unauthorized access.

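To help you see how these rules apply directly to your firm's daily operations, here’s a quick breakdown:

| HIPAA rule | What it covers | What it means for your firm |
|---|---|---|
| Privacy Rule | What counts as PHI and who may see, use, or share it | Use a client's PHI only for purposes directly tied to their case |
| Security Rule | Administrative, physical, and technical safeguards for ePHI | Identify your risks and put reasonable, appropriate measures in place |
| Breach Notification Rule | The required response to a breach of unsecured PHI | Notify affected individuals without unreasonable delay, and no later than 60 days after discovery |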

This table simplifies the core responsibilities, showing that HIPAA is a fundamental part of your firm's risk management and ethical obligations.

The Security Rule isn't about building an impenetrable digital fortress. It's about demonstrating due diligence. Regulators want to see that you've identified your risks and put reasonable, appropriate measures in place to address them.

Finally, the Breach Notification Rule is your firm's "duty to inform" clause. If a breach of unsecured PHI happens, this rule mandates a clear and timely response. It’s not about if a breach might happen, but what you are legally required to do when it does.

The rule requires you to notify affected individuals without unreasonable delay, and no later than 60 days after discovering the breach. If a breach affects 500 or more people, you must also notify the Secretary of Health and Human Services and, when more than 500 residents of a single state or jurisdiction are affected, prominent media outlets serving that area.
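The timing rule above is easy to turn into a date calculation. The following Python sketch is an illustration, not legal advice. The function name `breach_notification_plan` and the shape of its return value are hypothetical, and it covers only the obligations named in the paragraph above. It treats 500 or more affected people as a large breach, which is the regulatory threshold.

```python
from datetime import date, timedelta

NOTICE_WINDOW_DAYS = 60        # outer limit for notifying individuals
LARGE_BREACH_THRESHOLD = 500   # 500 or more affected people


def breach_notification_plan(discovered_on: date, affected_count: int) -> dict:
    """Return the latest notice date and who must be told (simplified)."""
    # "Without unreasonable delay" still applies; this is only the outer limit.
    deadline = discovered_on + timedelta(days=NOTICE_WINDOW_DAYS)
    notify = ["affected individuals"]
    if affected_count >= LARGE_BREACH_THRESHOLD:
        notify.append("HHS Secretary")
        notify.append("prominent media outlets (in some cases)")
    return {"deadline": deadline, "notify": notify}
```

For a breach discovered on March 1, 2024, the outer deadline this sketch returns is April 30, 2024. The firm should still act as soon as it reasonably can rather than wait for that date.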

This transparency is non-negotiable for maintaining trust and fulfilling your legal duties. As state bars issue new guidance on technology, understanding these rules is more important than ever. You can learn more by reviewing the California State Bar's recent guidance on generative AI for lawyers.

Using a popular, consumer-grade AI model for legal work is like discussing a confidential case in a crowded coffee shop. You just never know who’s listening in. While these tools feel convenient, they hide a massive liability, creating a minefield for attorneys who are bound by strict HIPAA compliance.

Most general AI systems are built to learn from the data they process. When you type in a prompt, that information can be absorbed by the model and used to train its future responses. This "data training" isn't an obscure feature; it's often the default setting.

Practical Example: A paralegal pastes a detailed client medical summary into a public AI chatbot to quickly draft a narrative. That sensitive Protected Health Information (PHI) could now become part of the AI's enormous knowledge base. It's now at risk of being surfaced in someone else's query, anywhere in the world. That simple act, aimed at efficiency, could trigger a serious HIPAA violation.

The single most critical piece missing from almost all general-purpose AI tools is the Business Associate Agreement (BAA). A BAA is a legally binding contract that makes a vendor responsible for protecting any PHI they handle for your firm. It's an absolute must-have for HIPAA compliance.

Without a signed BAA, you have zero legal assurance that the AI provider is using the required safeguards to protect your client's data. This leaves your firm completely exposed. If a breach happens on their system, the responsibility—and the liability—lands squarely on you.

"When an AI tool does not offer a BAA, it is explicitly stating that it is not a suitable partner for handling protected health information. Relying on such a tool for legal work involving medical data is a direct violation of HIPAA's vendor management requirements."

The absence of a BAA is a red flag. It tells you the tool wasn't built for professional, confidential work. It’s a gamble no law firm can afford to take. You can learn more about why general AI isn't safe for legal work in our detailed article on the subject.

The risks don't stop at data training. Consumer-grade AI platforms often lack the specific security architecture that HIPAA demands, leaving vulnerabilities wide open.

Think about these built-in risks when using a non-compliant AI:

These aren't just hypotheticals. IBM's 2023 "Cost of a Data Breach" report found that the healthcare industry has the highest average breach cost of any sector, at $10.93 million per incident. This reality makes it crystal clear: you need tools built with security as a priority, not an afterthought.

For law firms, the only responsible path forward is to use AI tools designed specifically for the legal industry. These platforms are built on a foundation of security and confidentiality from day one.

Providers of law-specific AI understand the legal and ethical duties you carry. They will readily sign a BAA and can provide clear documentation on their security measures, from data encryption and access controls to breach notification protocols. By choosing a compliant, law-specific AI, you're not just adopting new technology—you're upholding your duty to protect client confidentiality and securing your firm's ongoing HIPAA compliance.

After seeing the risks that come with using general AI tools, the next step is pretty clear: you have to pick technology partners who actually take security seriously. For a law firm, your best vetting tool isn't a long list of features—it's a legal document. This is where you move from just knowing there's a problem to putting a real, protective solution in place.

The absolute cornerstone of that solution is the Business Associate Agreement (BAA). Think of a BAA as your firm's legal shield. It's a non-negotiable contract required by HIPAA that makes your vendors legally responsible for protecting any Protected Health Information (PHI) they handle. If an AI provider isn't willing to sign a BAA, that’s your cue to walk away. The conversation is over.

This agreement legally binds the vendor to the exact same HIPAA standards your own firm must uphold, proving they have the necessary safeguards in place. It’s the only way to create a formal chain of trust and liability, protecting both your clients and your practice from the nightmare of a data breach.

When you're looking at new technology, especially AI platforms, you need to cut through the marketing fluff and ask direct, security-focused questions. A reputable provider built for the legal industry will have no trouble giving you straight answers.

Here’s a simple checklist to get you started:

These questions get right to the heart of HIPAA compliance. How confidently and transparently a vendor answers them tells you everything you need to know about their commitment to data security and whether they’re a partner you can trust.

Make the BAA the center of your vendor vetting process. When you do, a complex compliance headache becomes a simple business decision. Any vendor that truly values your firm's business will gladly give you the legal assurances you need to protect it.

Let's make this tangible. Say you need to summarize a client's medical records. If you use a general AI tool, you're likely pasting sensitive text into a public-facing platform. That data could be used to train their model or stored indefinitely on servers in an unknown location, and it is not covered by a BAA. In that one action, you’ve just created a massive compliance risk.

Now, let’s look at an AI platform like ProPlaintiff.ai, which was built from the ground up for personal injury law firms.

When you use a law-specific tool designed for HIPAA compliance, the whole game changes. The platform operates under a strict BAA. Your data is processed in a secure, private environment and is encrypted at every single stage. Access is tightly controlled, and the entire system is designed to prevent data leaks, not invite them. The provider is your partner in protection, not a source of risk.

This distinction is everything. General AI tools are built for mass-market convenience and data harvesting. Law-specific AI is built for professional responsibility and data protection. Choosing the right one isn't just a "best practice"—it's a fundamental requirement for any modern law firm handling sensitive client information. By making smart technology choices, you give your firm the power to work efficiently without ever compromising your core ethical and legal duties.

Figuring out how to turn HIPAA rules into real-world practice can feel like a huge task, but it really just comes down to putting a few common-sense safeguards in place. A solid HIPAA compliance program is built on three pillars: administrative, physical, and technical controls. This checklist gives you clear, actionable steps you can take right now to strengthen your firm’s defenses and keep client data safe.

Don’t think of these safeguards as separate chores. They’re interconnected layers of security. Administrative policies guide your team, physical controls protect your office, and technical measures lock down your digital world. Together, they create a complete framework for protecting sensitive information.

Administrative safeguards are the policies and procedures that act as the foundation for everything else. They’re the "who, what, and when" of your security plan, making sure everyone on your team knows their role in protecting PHI.

Here are the essential steps to get started:

Next up are physical safeguards, which are all about controlling actual, tangible access to PHI. These measures stop unauthorized people from physically seeing or grabbing sensitive information from your office, workstations, or file rooms.

These simple but effective actions make a huge difference:

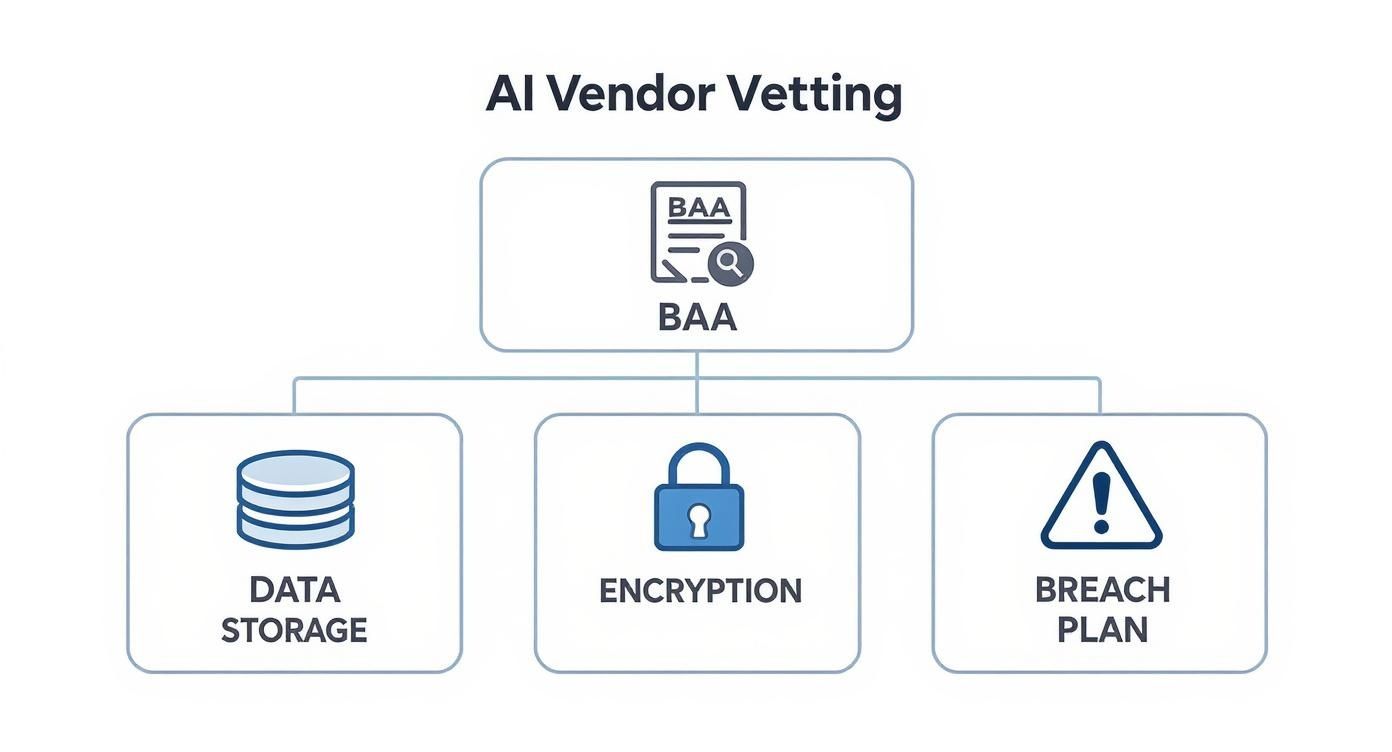

This infographic breaks down what to look for when vetting any new technology partner, starting with the absolute must-have: a Business Associate Agreement (BAA).

As you can see, a vendor’s contractual promise to protect data is the first hurdle. Only after that’s cleared should you dig into their technical security measures.
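The ordering described here (BAA first, technical measures second) can be written down as a simple gate. This is a minimal Python sketch. The `Vendor` fields and the `vet_vendor` function are hypothetical names, and the checks mirror only the items this article mentions: a signed BAA, encryption, access controls, breach notification protocols, and whether customer inputs are used for model training.

```python
from dataclasses import dataclass


@dataclass
class Vendor:
    name: str
    signs_baa: bool
    encrypts_data: bool
    has_access_controls: bool
    has_breach_protocol: bool
    trains_on_customer_data: bool


def vet_vendor(vendor: Vendor) -> tuple[bool, list[str]]:
    """Return (approved, reasons). The BAA check comes before anything else."""
    if not vendor.signs_baa:
        # No BAA: stop here and skip the technical review entirely.
        return False, ["will not sign a BAA"]
    gaps = []
    if not vendor.encrypts_data:
        gaps.append("no data encryption")
    if not vendor.has_access_controls:
        gaps.append("no access controls")
    if not vendor.has_breach_protocol:
        gaps.append("no breach notification protocol")
    if vendor.trains_on_customer_data:
        gaps.append("uses customer inputs for model training")
    return (not gaps), gaps
```

A vendor without a BAA is rejected with a single reason, no matter how strong its technical answers are, which matches the "walk away" rule described earlier.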

Finally, we have technical safeguards. These are the technology-based controls that protect electronic PHI (ePHI). In today's world, these are arguably the most important parts of your HIPAA compliance strategy. To get a handle on the technical side, it's often helpful to look into robust compliance solutions that can simplify the process.

Key technical controls include:

Let’s be honest: HIPAA compliance isn’t a one-time project you can check off a list. It's an ongoing commitment, a culture of security that needs to become part of your firm’s DNA. The checklists and safeguards are just the tools; the real work is embedding data protection into every decision you make.

Think of it less as a burden and more as a powerful way to stand out. Protecting client health information is one of our most fundamental ethical duties. When clients see you take their privacy this seriously, it builds a level of trust that goes far beyond the outcome of any single case.

The rise of AI has thrown a new wrinkle into our duty of confidentiality. Using a general-purpose AI model without a Business Associate Agreement (BAA) is a massive gamble with your client’s privacy. Many of these platforms use your inputs to train their models, which means sensitive PHI could easily be exposed.

For attorneys, this draws a bright ethical line in the sand. Choosing a law-specific AI tool over a generic one isn't just a tech decision—it’s a direct reflection of your commitment to your clients. Real HIPAA compliance means going deeper and understanding the ethics in legal AI use, then partnering with vendors who are just as dedicated to security as you are.

Protecting client data is no longer just about locked file cabinets and secure passwords. It's about making conscious, informed decisions about the technology you bring into your practice. Every tool you adopt is a reflection of your commitment to confidentiality.

So, where do you start? Audit your firm's technology, especially any AI tools you're using. By consciously choosing partners who put data protection first, you aren't just following rules—you're future-proofing your practice. Beyond the software, simple, practical measures are key; learning how to securely store and exchange sensitive files is a critical step in protecting patient information effectively.

This diligence is how you honor the trust your clients place in you, cementing your firm’s reputation as a responsible and forward-thinking practice.

When it comes to HIPAA compliance, the devil is always in the details. Attorneys often have practical questions about how these complex rules apply to the day-to-day work of a personal injury firm. Getting these answers right is the key to protecting your clients, your reputation, and your practice from serious liability.

Here are a few of the most common questions we hear from lawyers trying to navigate HIPAA in the real world.

What counts as PHI in a law firm?

Protected Health Information (PHI) is a much broader category than most people think. It’s not just the formal medical chart. For a law firm, PHI includes any piece of information you create or receive that can link a specific person to their health condition, treatment, or payment for healthcare.

Think about the files that land on your desk every single day. In a legal setting, PHI is everywhere:

Even something as simple as an invoice from a chiropractor becomes PHI the second it’s added to your case file.

Does HIPAA apply to a small firm that handles only a few cases involving medical records?

Yes, absolutely. This is a huge misconception. Many firms believe HIPAA only applies if they handle a large volume of medical records, but that's not how it works. Your status as a Business Associate is determined by your function, not the number of cases you handle.

The moment you receive even a single medical record containing PHI to support a client's legal claim, your firm becomes a Business Associate under the law. This single action triggers the full weight of HIPAA compliance requirements. There is no minimum threshold or grace period.

Can we store client files in standard cloud storage like Google Drive or Dropbox?

You can, but it’s incredibly risky if you don’t manage it perfectly. Simply having a Google Drive or Dropbox account does nothing to ensure HIPAA compliance on its own.

To even begin using these services, you must have a signed Business Associate Agreement (BAA) with the provider. This is a legal contract that holds them accountable for protecting the PHI you store on their servers. But the BAA is just the starting line.

You are still on the hook for correctly configuring the platform’s security settings. This means digging into complex controls for data encryption, access permissions, and audit logs—technical steps that are easy to miss and can leave your firm wide open to a data breach and massive fines.
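To show what access permissions and audit logs mean in practice, here is a small Python sketch. It is an in-memory illustration only. The case ID, user names, and the `open_record` function are hypothetical, and a real deployment would rely on the storage platform's own permission and logging features rather than code like this.

```python
from datetime import datetime, timezone

# Hypothetical mapping of case files to the staff assigned to them.
case_teams = {"case-1042": {"attorney.lee", "paralegal.ortiz"}}

# Every access attempt is recorded here, whether granted or denied.
audit_trail = []


def open_record(user: str, case_id: str) -> bool:
    """Allow access only to assigned staff, and log every attempt."""
    allowed = user in case_teams.get(case_id, set())
    audit_trail.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "case": case_id,
        "outcome": "granted" if allowed else "denied",
    })
    return allowed
```

The point of the design is that denied attempts are logged too, so a later review can show who tried to reach a client's records as well as who actually did.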

Because of this complexity, the smarter and safer path is to use a platform built from the ground up for secure legal work.

Ready to stop worrying about the compliance risks of generic software? ProPlaintiff.ai is the agentic AI operating system built for personal injury firms, with HIPAA compliance at its core. Securely generate demand letters, medical chronologies, and case summaries without putting client data at risk.

Discover how ProPlaintiff.ai can protect your firm and boost your efficiency.